Multi-modal Avatar Experience and Automated Neurocognitive Assessments at TTC 2019

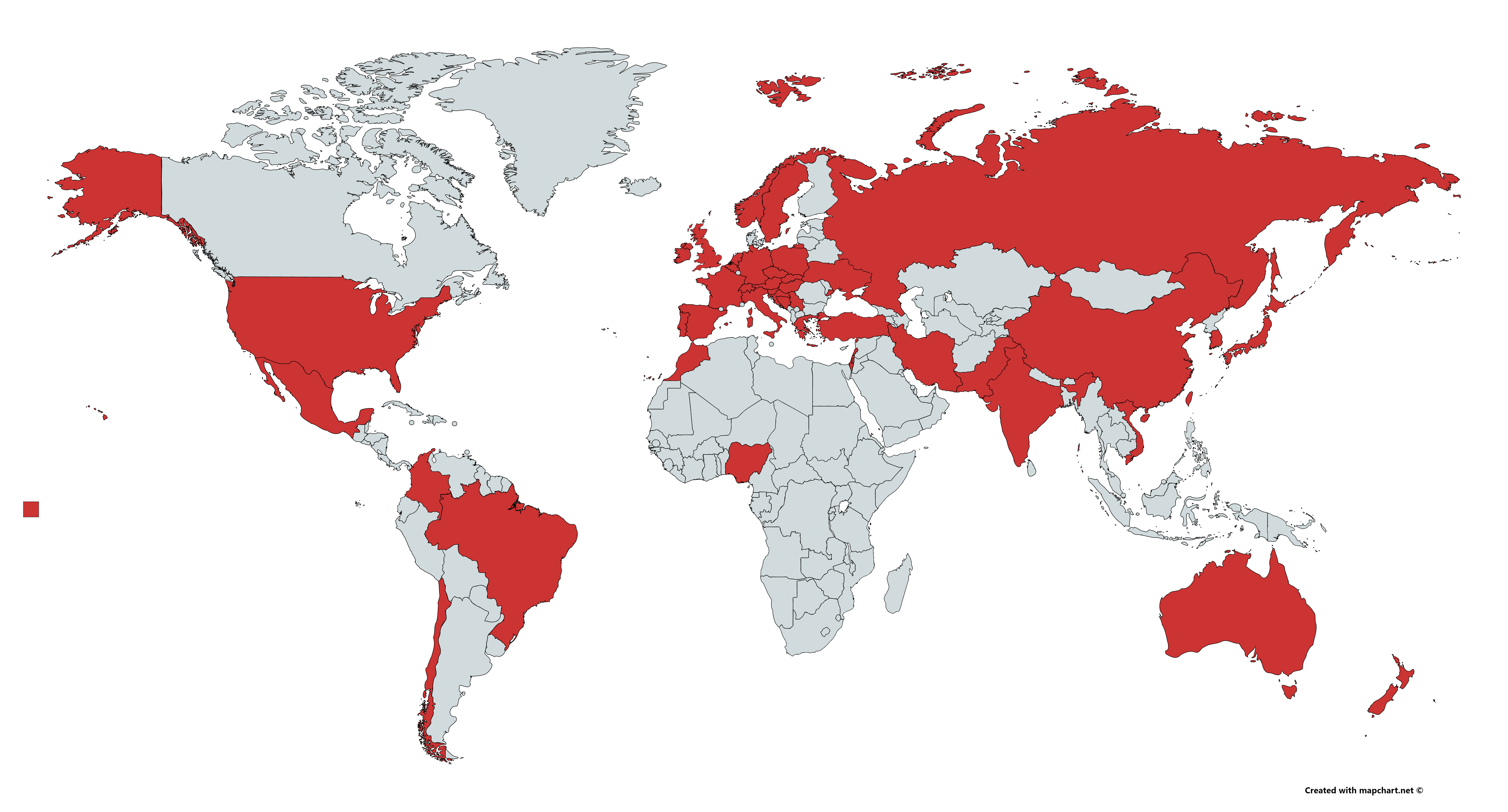

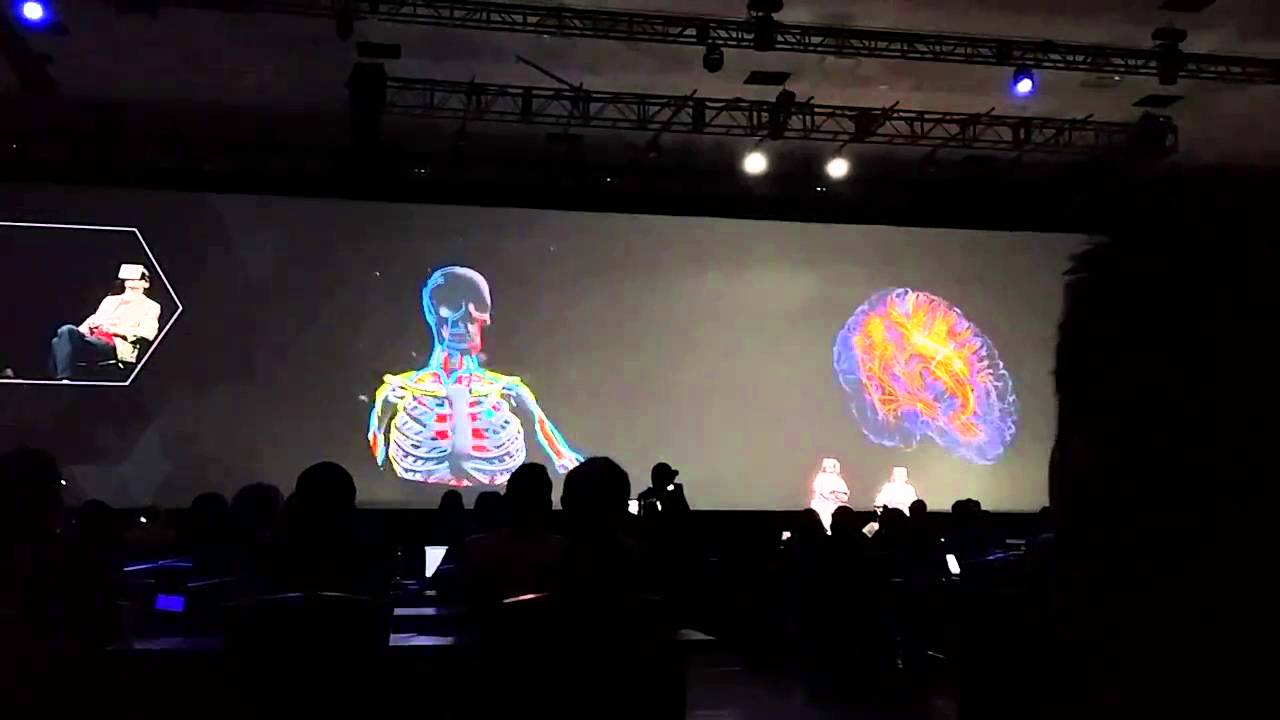

At the 2019 Transformative Technologies Conference (TTC) in Palo Alto, California, attendees could try the multi-modal Avatar Experience. Inside it, they flew around and through a 3D representation of their own body and brain, watched their brain and heart activity displayed on a virtual avatar, and controlled elements of the virtual world using only their mind. Afterwards, each attendee received an automatically generated interactive report detailing how their neurocognitive states changed during the experience.

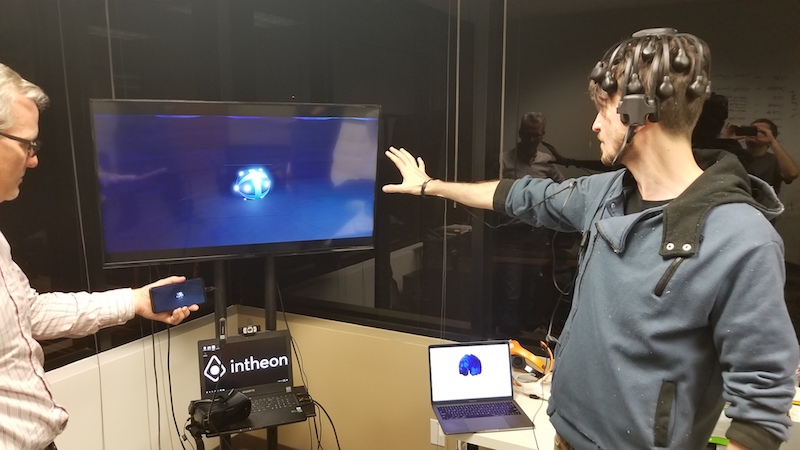

In addition to real-time brain network and cardiac (heart rate) activity displayed inside the 3D body avatar, muscle activity was captured and used by attendees to activate a flying orb inside the Avatar virtual world. Once the orb was active, attendees could control it through a brain-computer interface by changing activity in brain networks associated with attention and meditation states (closed-loop neurofeedback). Brain, heart, and muscle activity were captured with a CGX Quick-20r headset, and cortical activity and brain connectivity (using Granger causality) were computed in real time with Neuropype. These outputs powered the game-like Avatar application, built with Unity, for real-time 3D visualization.
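The post does not describe how Neuropype computes Granger causality, so the following is only an illustration of the underlying idea, not Neuropype's implementation or API. In a minimal time-domain, bivariate version, signal x "Granger-causes" signal y if adding x's past to an autoregressive model of y reduces the prediction error. The function names, the model order, and the log-variance-ratio index below are illustrative assumptions; a real-time pipeline would presumably run something like this (or a multivariate or spectral variant) over a sliding window of cortical signals.

```python
import numpy as np

def lagged_design(series_list, order):
    """Stack the `order` most recent past values of each series as regressors, plus an intercept."""
    n = len(series_list[0])
    cols = [s[order - lag : n - lag] for s in series_list for lag in range(1, order + 1)]
    cols.append(np.ones(n - order))
    return np.column_stack(cols)

def residual_variance(design, target):
    """Variance of the least-squares residuals when regressing target on design."""
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return float(np.var(target - design @ coef))

def granger_index(x, y, order=5):
    """ln(restricted / full residual variance) for predicting y; near 0 means x's past adds nothing."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    target = y[order:]
    var_restricted = residual_variance(lagged_design([y], order), target)  # y's own past only
    var_full = residual_variance(lagged_design([y, x], order), target)     # y's past plus x's past
    return float(np.log(var_restricted / var_full))

# Synthetic demo (hypothetical data): y is driven by x with a 2-sample delay.
rng = np.random.default_rng(0)
n = 2000
x = rng.standard_normal(n)
y = np.zeros(n)
for t in range(2, n):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 2] + 0.1 * rng.standard_normal()

gc_x_to_y = granger_index(x, y)
gc_y_to_x = granger_index(y, x)
print(f"x -> y: {gc_x_to_y:.2f}, y -> x: {gc_y_to_x:.3f}")
```

The asymmetry (a large x-to-y index, a near-zero y-to-x index) is what makes this a directed connectivity measure. Significance testing, for example with an F-test or surrogate data, is omitted from this sketch.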

At the end of each session, a Neuroscale Insights report was generated. It showed how the user's neurometrics, including workload and relaxation, changed over the course of the session, along with changes in brain activity across frequency bands within different brain regions, displayed on 3D cortex models.
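The post does not say how the report computes per-band changes. One common approach, sketched here under that assumption, is to estimate each channel's power spectral density by averaging Hann-windowed periodograms over segments (Welch-style, here without overlap), integrate it over conventional EEG bands, and express the session relative to the baseline in decibels. The band edges, segment length, sampling rate, and synthetic data are illustrative choices, not Neuroscale Insights parameters, and the step that maps channels onto cortical regions (source localization) is left out.

```python
import numpy as np

# Conventional EEG band edges in Hz (assumed; the report's own definitions are not stated).
BANDS = {"delta": (1.0, 4.0), "theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def welch_psd(eeg, fs, seg_len=256):
    """Average Hann-windowed periodograms over non-overlapping segments.

    eeg: array of shape (channels, samples). Returns (freqs, psd) with psd of shape (channels, freqs).
    """
    n_seg = eeg.shape[1] // seg_len
    window = np.hanning(seg_len)
    scale = fs * np.sum(window ** 2)
    segs = eeg[:, : n_seg * seg_len].reshape(eeg.shape[0], n_seg, seg_len)
    spectra = np.abs(np.fft.rfft(segs * window, axis=-1)) ** 2 / scale
    psd = spectra.mean(axis=1)
    psd[:, 1:-1] *= 2  # fold negative frequencies into a one-sided spectrum (seg_len is even)
    freqs = np.fft.rfftfreq(seg_len, d=1.0 / fs)
    return freqs, psd

def band_powers(eeg, fs):
    """Integrate the PSD over each band, giving one power value per channel and band."""
    freqs, psd = welch_psd(eeg, fs)
    df = freqs[1] - freqs[0]
    return {name: psd[:, (freqs >= lo) & (freqs < hi)].sum(axis=1) * df
            for name, (lo, hi) in BANDS.items()}

# Synthetic demo (hypothetical data): 60 s of noise, with a 10 Hz rhythm added to channel 0 in the session.
fs = 256
t = np.arange(fs * 60) / fs
rng = np.random.default_rng(1)
baseline = rng.standard_normal((2, t.size))
session = rng.standard_normal((2, t.size))
session[0] += 2.0 * np.sin(2 * np.pi * 10.0 * t)

base_bp = band_powers(baseline, fs)
sess_bp = band_powers(session, fs)
change_db = {band: 10 * np.log10(sess_bp[band] / base_bp[band]) for band in BANDS}
print({band: np.round(v, 1) for band, v in change_db.items()})
```

Channel 0 should show a large alpha increase while channel 1 stays near 0 dB in every band.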

An attendee at TTC 2019 manipulates an orb with his mind while watching his own brain activity and connectivity in real time.

Sample plot from the Neuroscale Insights report auto-generated at the end of each session. It shows power spectral density in the theta band during the session relative to the pre-session baseline, expressed as a z-score and masked to show only changes greater than 2.5 standard deviations, mapped onto a fully rotatable cortex plot and two hemisphere plots.
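The baseline-relative z-score and masking described in the caption can be sketched as follows. Because the post does not give the details, this assumes that the baseline is split into epochs, that each channel (or cortical source) is z-scored against the mean and standard deviation of its baseline theta power, and that "greater than 2.5 standard deviations" means |z| > 2.5 in either direction. The function name and toy numbers are hypothetical.

```python
import numpy as np

def masked_baseline_zscore(baseline_epochs, session_power, threshold=2.5):
    """z-score session band power against baseline epochs, hiding small changes.

    baseline_epochs: shape (epochs, channels), band power per baseline epoch.
    session_power:   shape (channels,), band power during the session.
    Returns z-scores with |z| <= threshold replaced by NaN (left blank on a plot).
    """
    mu = baseline_epochs.mean(axis=0)
    sigma = baseline_epochs.std(axis=0, ddof=1)
    z = (session_power - mu) / sigma
    return np.where(np.abs(z) > threshold, z, np.nan)

# Toy theta-power values for two channels (hypothetical units).
baseline_theta = np.array([[1.0, 2.0], [1.2, 2.2], [0.8, 1.8], [1.0, 2.0]])
session_theta = np.array([1.1, 2.6])
z_masked = masked_baseline_zscore(baseline_theta, session_theta)
print(z_masked)  # channel 0 (z ≈ 0.61) is masked as NaN; channel 1 (z ≈ 3.67) survives
```

Using NaN rather than zero for masked values lets a plotting library leave those regions uncolored instead of drawing them as "no change".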