The Latest News from Intheon

Neuropype 2023: Our Biggest Release Yet

It's time for our next major release (2023) of our NeuroPype signal processing suite! This is our biggest release to date, with major new features and literally hundreds of new nodes that continue to expand NeuroPype's capabilities and use cases.

A major new component is the ability to create and run deep neural networks inside NeuroPype pipelines, with convolution layers, dense layers, normalizations, activations, optimizers, schedules, cross-validation, training, and inference. Comprehensive deep learning models, such as EEGNET, can now be expressed as a NeuroPype pipeline. You can now create end-to-end pipelines that run from data curation, preprocessing, and feature extraction to deep learning predictions and cross-validation. These pipelines can also process multiple modalities (e.g., EEG, Gaze, other biosignal data) and incorporate them into the same model.

Neuropype 2022 is out!

Another year has started and it's time for another major release (2022) of our Neuropype signal processing suite! This release includes our Experiment Recorder application for cognitive task presentation and data capture (which uses Neuropype on the backend), which makes running experiments and collecting data from LSL-compatible devices an easy affair. Also included is a new control panel for easily launching / viewing / stopping / starting multiple pipelines. That's not to mention over 25 new nodes (including several new features for processing fNIRS data), performance enhancements, ...

Research on flow and the neurophysiology of VR flight with JUMP

We're excited to be partnering on a groundbreaking research project led by Jump, together with the Flow Research Collective and leading neuroscientists, including Dr. Richard Huskey (UC Davis), Dr. Adam Gazzaley (UCSF, Neuroscape), Steven Kotler (Executive Director, Flow Research Collective), and Dr. Michael Mannino (CSO, Flow Research Collective), to explore the effects of the JUMP experience and its ability to induce a state of flow.

Exploring brain activity during cycling with Outride

Outride, a non-profit organization dedicated to improving the lives of youth through cycling, hosted their 2022 Outride Summit event, which, in addition to presenting research on the positive impacts of physical exercise on the brain and mental health, also included an interactive demo where attendees could view their brain activity in real time while cycling. Attendees were fitted with an EEG headset, and Neuropype was used to compute cortex activity and connectivity in real time, which were displayed on our 3D "avatar experience" visualization.

Neuropype 2021 released!

Happy new year, and we're especially happy to announce the launch of the 2021 Neuropype Suite release. This major release includes nearly 50 new nodes, including a new node package, NIRS, with a collection of nodes for fNIRS data, as well as new nodes for signal quality diagnostics, artifact regression, incremental signal whitening, covariance metrics classification, network efficiency, MIDI output, linear trend, ...

2021 BCI Award Finalists announced

We are excited to see the announcement of 12 very compelling projects as this year’s finalists for the annual BCI Award.

The finalists will each deliver presentations on the 19th of October at the 2021 IEEE SMC conference and virtually via Zoom, after which the winners will be announced. Register for the virtual presentations with this link.

Intheon is proud to be a sponsor of the 2021 BCI Award and help recognize the innovative, hard work being contributed by researchers around the world, each helping make the world a better place through BCI technology!

Dissemination of EEG Technologies at BRAIN Initiative Workshop

Tim Mullen, Intheon CEO, gave the following presentation at the BRAIN Initiative Workshop: Transformative Non-Invasive Imaging Technologies, held March 9-11, 2021. The goal of this workshop was to bring together neuroscientists, tool developers/engineers, and industry partners to discuss what new non-invasive functional imaging tools could be realized in the next five to ten years, their potential impact on human neuroscience research, and possible pathways forward for broader dissemination.

Intheon at TransTech 2020

This month, we made our (virtual) pilgrimage again to the Transformative Technologies Conference, joining over 1000 founders, investors, venture philanthropists, venture capitalists, and other innovators in the wellness tech space. There, we joined on stage 30+ of the most respected thought leaders addressing society’s biggest and most complex problems across wellness, productivity, mental health, etc., from Google VP Ivy Ross to WIRED founder Jane Metcalfe, to brief innovators and investors on our latest advances and where we are heading over the next year.

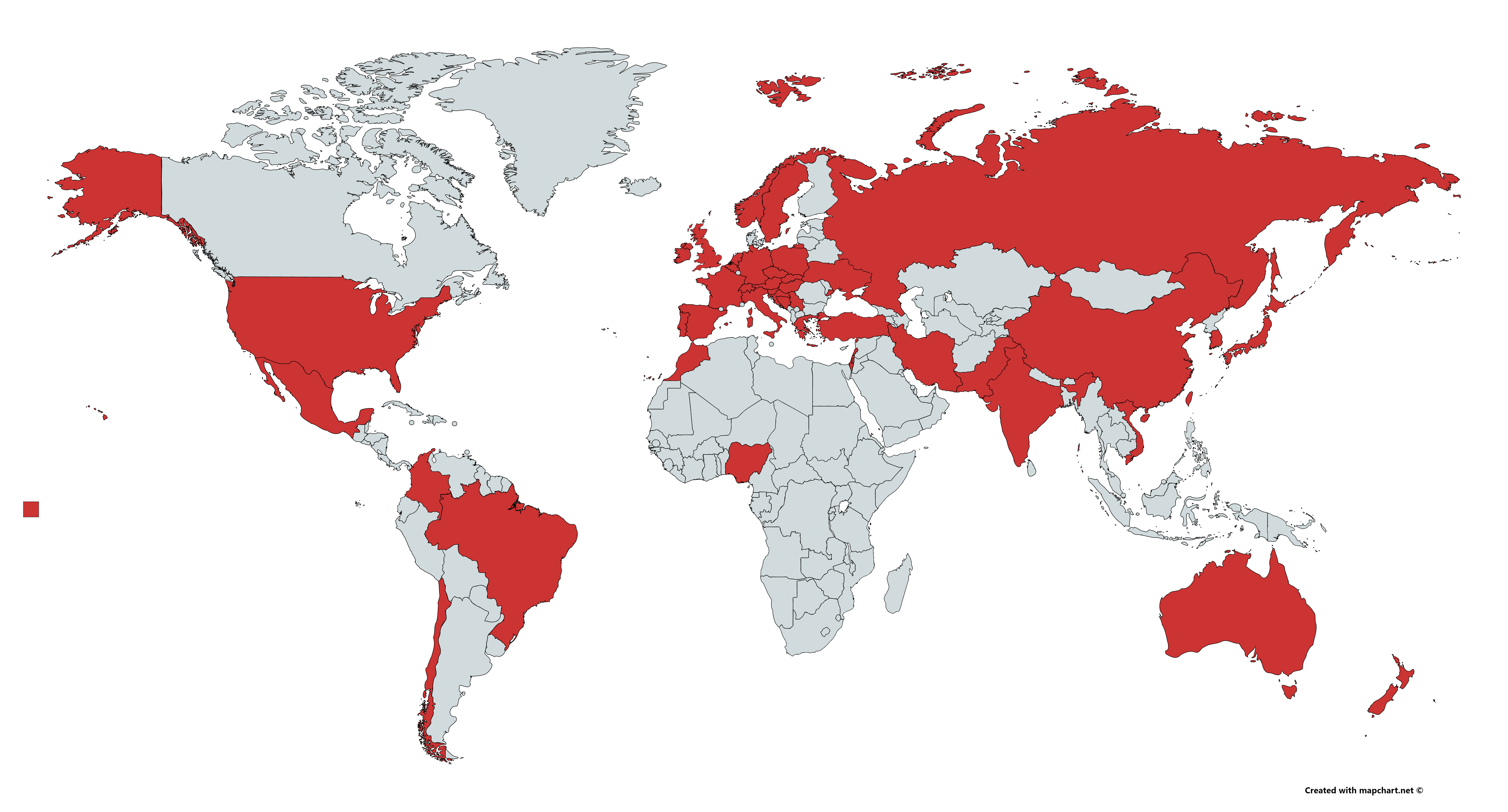

Neuropype Academic Edition around the world

The Neuropype Academic Edition is available free-of-charge for faculty and students at academic institutions, for use in non-commercial research. Ideal for hypothesis testing, real-time multi-modal signal processing and brain-computer interfacing, and recorded signal data analysis workflows. Apply today and turbocharge your research! (Multiple seat licenses for university labs available too!)

Working to fight COVID-19? Get a free Neuropype license.

If you're working on a project related to the fight against COVID-19 and could use a powerful tool for biosignal processing in your work, please contact us for a free Neuropype license. We already offer a free-of-charge Academic Edition license to faculty, students and researchers at academic institutions, so if you're one of those, you can already apply for a license today. However, we're extending the use of this free-of-charge license to those not at academic institutions but who are engaged in a project related to the global fight against COVID-19 and its impact.

Startup/Personal Edition Released

With the 2020 Neuropype Release we're also excited to announce a new low-cost edition specifically for early-stage startups and/or personal use. This full-featured extensible edition of Neuropype is being made available to individual researchers, hackers/makers, and anyone with an interest in processing biosignals and creating realtime BCIs, as well as small companies with annual revenue (or funds raised) of $200K or less. Perfect for product R&D and/or BCI projects. 30-day free trial included!

Neuropype 2020.0.0 is out!

We're delighted to announce the launch of the 2020 Neuropype Suite release. This major release includes nearly 50 new nodes, including a new node package, Gaze, with a collection of nodes for processing eye tracking signal data, as well as new nodes for removing artifacts, interpolating missing channels or missing data along an axis, spatio-spectral decomposition, CCA feature extraction, trial extraction based on trial metadata for ERP analysis, ...

Multi-modal Avatar Experience and automated Neurocognitive Assessments at TTC 2019

At the 2019 Transformative Technologies Conference in Palo Alto, California, attendees were able to experience the multi-modal Avatar Experience, in which they could fly around and through a 3D representation of their body and brain while visualizing their own brain and heart activity in a virtual avatar, and control elements in that virtual world using only their mind. They also received an auto-generated interactive report detailing changes in their neurocognitive states during the experience.

New Neuroscale Insights features unveiled at TransTech 2019

Intheon was excited to participate again in the Transformative Technologies Conference 2019, where we demonstrated new features of the Neuroscale Insights platform which, combined with our Experiment Recorder software running on Neuropype, enable commercial and academic research teams to easily collect and process biosignal data, select from a suite of customizable experimental tasks for cognitive state assessment, and automatically generate full-featured interactive graphical reports presenting a wide range of cognitive and neurophysiological metrics and statistical analyses.

Brain fly-through Avatar Experience at the BrainMind Summit (Stanford)

The BrainMind Summit, held at Stanford University on October 12-13, 2019, included an Experiential Neurolab showcasing new inventions, technology demonstrations and artistic exhibits. Intheon was invited to showcase a new experience which allowed Summit attendees to experience their own brain and body states represented within a 3D avatar.

2019 BR41N.io BCI Hackathon at IEEE SMC Bari

Congratulations to the prize winners of the 2019 BR41N.io BCI Hackathon at IEEE SMC in Bari, Italy! The prizes, including a $1000 cash prize from Intheon, were awarded to four diverse teams from a number of countries:

“Tools for Multi-modal Neuroscience Data Analysis and BCI” at 1st mbt Mobile EEG Workshop

mBrainTrain held their 1st International mbt Mobile EEG Workshop in Belgrade, Serbia, bringing together pioneers in the application of mobile EEG analysis who shared best practices, tools, and ideas aimed at helping users conduct studies in real-world and ambulatory environments while saving time on otherwise complex and lengthy experimental setups. Dr. Tim Mullen gave a talk on “Tools for Multi-modal Neuroscience Data Analysis and Brain Computer Interfacing” at the workshop.

Intheon presents Middleware for the Mind at BrainMind Summit @ MIT

The BrainMind Summit hosted with MIT was held on May 4-5, 2019. Tim Mullen, Intheon CEO, gave a presentation which laid out Intheon's past, present and future, and how we are building Middleware for the Mind for both real-time and large-scale turnkey analysis and interpretation of data from a wide range of bio and neural sensor devices.

Watch the presentation below!

"Closing the chasm" in neuroscience at BrainTech 2019 + the Brain Gurion Hackathon

In March, Tim headed to Tel Aviv, Israel to give an invited presentation at Israel's premier international neurotechnology conference, BrainTech 2019, on "Closing the Chasm: Accelerating Translational Neuroscience Applications through Automated Biosignal Analysis in the Cloud".

Conversation with Allen Saakyan on the Simulation Series

At TTC'19, Tim sat down with Allen Saakyan from the awesome Simulation series for a conversation on Intheon's mission; the current and future state of neurotechnology; ethics, stewardship, and governance in exponential technologies; views on consciousness; and related topics. Check out the interview here.

Presenting & hosting workshops at the EEGLAB 2018 Workshop held at UCSD

We were delighted to participate in presenting at and/or hosting several sessions and workshops at the EEGLAB 2018 Workshop organized by the Swartz Center for Computational Neuroscience at the University of California San Diego on November 8-12.

Accelerating Scientific Research at TransTech 2018

BR41N.io Brain-Computer Interface hackathon at IEEE SMC Miyazaki 2018

In addition to presenting several papers at the IEEE International Conference on Systems, Man and Cybernetics (SMC) 2018 in Miyazaki, Japan, held on October 7-10, Intheon is proud to be a sponsor of the Brain-Computer Interface (BCI) Hackathon at the conference.

Dr. Tim Mullen talks about the future of neurotechnology at TEDxSanDiego: Revelations

On July 28, Dr. Tim Mullen (CEO, Intheon) gave a talk at a TED Salon, hosted at the Salk Institute for Biological Studies, where he spoke about a future in which advanced neurotechnology is woven into the fabric of everyday life, a natural extension of Mark Weiser's (CTO, Xerox PARC) concept of "ubiquitous computing".

Upcoming presentation at ISS R&D Conference

We are excited to be participating in this year's ISS R&D Conference!

We'll be presenting recent research, in collaboration with the UND/NASA Human Spaceflight Laboratory, Fordham University, and Cognionics, evaluating new capabilities for assessing crewmember neural and cognitive performance during a 14-day simulated Lunar/Martian mission in the Inflatable Lunar/Martian Habitat Analog at the University of North Dakota.

Hyperbrain demonstration at BCI Asilomar

Intheon gave several presentations at the 7th International BCI Meeting, held in Asilomar, California, on May 21-25. In one of these, "From N=1 to N=Everyone: Scalable Technologies for Multi-Brain Computer Interfacing", Tim Mullen gave a live demonstration of a "hyperbrain": real-time computation of neural synchrony across groups of individuals, all done with mobile devices and NeuroScale.

Cognitive assessment research project at the NASA-UND Lunar/Martian Analog Habitat

We're excited to participate with the Human Spaceflight Laboratory at the University of North Dakota, Fordham University, and Cognionics, on a research project for Inflatable Lunar Martian Analog Habitat Mission V, testing the feasibility of using wearable EEG and specially designed cognitive assessment protocols to characterize and predict future changes in cognitive performance during Lunar/Martian missions.

Intheon a Featured Beta Startup at Collision 2018

Intheon was invited to be a Featured Beta Startup at the Collision tech conference held in New Orleans on May 1-3, where we showcased our NeuroPype desktop signal processing application and previewed the capabilities of our NeuroScale cloud platform. We received a lot of positive feedback and excitement from attendees about what we have been building and have in the works as we pioneer the first cloud-scalable platform for 'anytime, anywhere' neural state decoding!

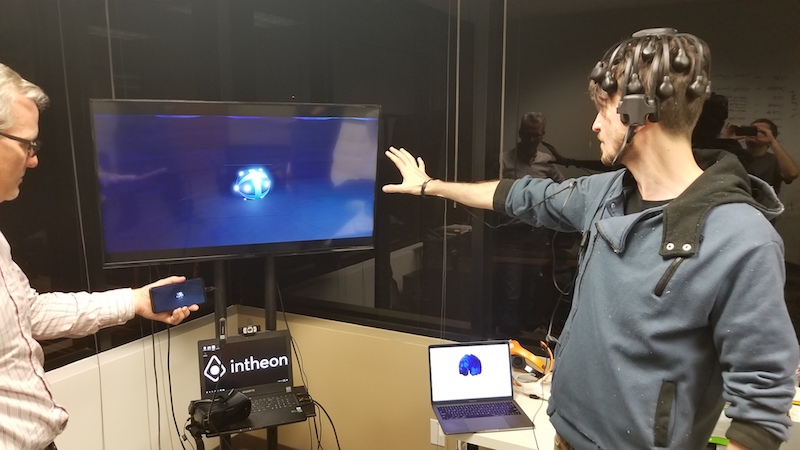

EEG/EMG/ECG-powered VR app over the cloud with NeuroScale

Having fun at the Intheon offices with a GearVR-capable application showing integration of heart, muscle/gesture, and cortically localized brain activity, processed in the cloud with NeuroScale, and powering an interactive mobile VR game rendered on a phone. While wearing a Cognionics Quick-30 headset for measuring EEG, EMG and ECG signals, we took turns activating or deactivating the orb using EMG, while levitating the orb with our EEG activity by focusing our attention. (Game dev by Tim Omernick.) One more step towards "anytime, anywhere" BCI applications!

SMC Founders Keynote "From Research to Scientific Breakthroughs to Improving Lives of People"

Our CEO, Tim Mullen, recently gave an invited Keynote talk discussing our mission to power the future of "anytime, anywhere" neurotechnology, at the Founders Keynote Session of the IEEE Systems, Man, and Cybernetics conference in Budapest, Hungary.

First demonstration of Real-Time Brain Mapping in a Web Browser

This clip shows the first demonstration of wireless dry EEG brain activity mapping and directed connectivity analysis with real-time interactive 3D visualization in a standard web browser on a smart phone. This represents an important step towards enabling powerful new pervasive applications of EEG, including live remote monitoring of brain activity (neurotelemetry) for clinical or consumer applications.

PC Magazine Article

Tim Mullen (CEO, Intheon) and Mike Chi (CTO, Cognionics Inc) with the first high-density (64-channel) dry wearable EEG system, the Cognionics HD-72.

Tim Mullen post-Keynote Discussion with IEEE CESoc TV

Dr. Tim Mullen gave a Keynote address on “Brain Computer Interfaces: Present and Future” at CES 2016 (IEEE International Conference on Consumer Electronics). In this video, he discusses our vision for pervasive brain-computer interfaces with IEEE CESoc TV.

Wireless Monitoring of a Car Driver’s Brain State on the Cloud

This video demonstrates a real-time wearable brain-computer interface (BCI) capable of detecting whether a car driver (here, Intheon CDO Nima Bigdely Shamlo) heard an unusual sound interspersed amongst regular sounds played over the car speakers. This is the first demonstration of a real-time, dry wireless EEG BCI system operating in a moving vehicle with real-time computation on the cloud (NeuroScale).

Real-Time Brain Monitoring on a Cell Phone Over NeuroScale

Intheon CTO Christian Kothe is in San Diego wearing a 21-channel Neuroelectrics Enobio EEG cap. The data is streamed live through the NeuroScale platform where we are removing artifacts and computing a real-time map of cortical brain activity and brain network connectivity (multivariate Granger causality). The resulting data is streamed to San Francisco where Intheon CEO, Dr. Tim Mullen, visualizes Christian’s 3D brain maps in real-time on his cell phone.

Planes and Trains…and Brains!

Here we show how a brain-computer interface — here a multiplayer, mobile brain-controlled game (“neurogame”) called Tractor Beam — can be powered by the NeuroScale cloud platform and used on planes and trains (or anywhere else you have Internet). Here Intheon team members (Mullen, McCoy, Bigdely-Shamlo, Ward) were on their way from San Diego to San Francisco, on May 5th 2015, to present at the NeuroGaming conference.

Epic Rhythm Experience With Mickey Hart and the Glass Brain at Mozart & the Mind 2014

Demonstrating the “Glass Brain” at Mozart & the Mind 2014 in an epic rhythm experience with Mickey Hart, Adam Gazzaley, Christine Stevens, Bill Walton, and 700 friends! The Glass Brain — powered by the Intheon team’s software — is the world’s first interactive, real-time, high-resolution visualization of an active human brain (cortical brain activity and connectivity), designed specifically for Virtual Reality. Here Intheon CEO Tim Mullen (right) is flying through the live brain of Grateful Dead drummer Mickey Hart (left) in Oculus VR. Mickey is wearing a 64-channel wearable EEG system developed by Cognionics Inc.

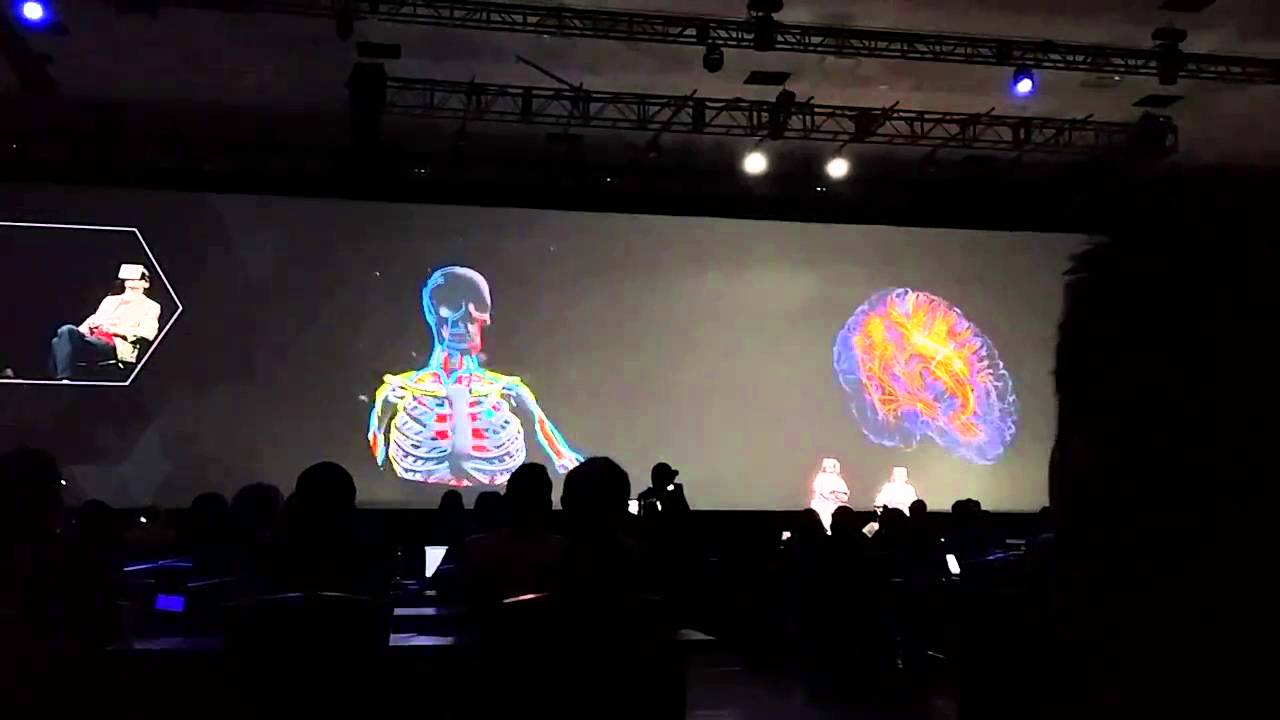

The Glass Brain @ GTC2014 Keynote

Demonstrating the “Glass Brain” at the GTC2014 Keynote with Adam Gazzaley. The Glass Brain — powered by the Intheon team’s software — is the world’s first interactive, real-time, high-resolution visualization of an active human brain (cortical brain activity and connectivity), designed specifically for Virtual Reality. Here Intheon CEO Tim Mullen (right) is flying through the live brain of Grateful Dead drummer Mickey Hart (left) in Oculus VR. Mickey is wearing a 64-channel wearable EEG system developed by Cognionics Inc. Visit http://intheon.io/projects/ for more details on the Glass Brain and other Intheon projects!